Abstract

ML Skills:

- Data pre-processing and labelling.
- Applying and implementing linear regression, non-linear regression, K-nearest neighbours, tree-based methods, random forest, SVM, and neural network models.
- Evaluating the performance of different machine learning models using different types of error functions.
- Experienced in using the scikit-learn and TensorFlow libraries in Python to implement machine learning models.

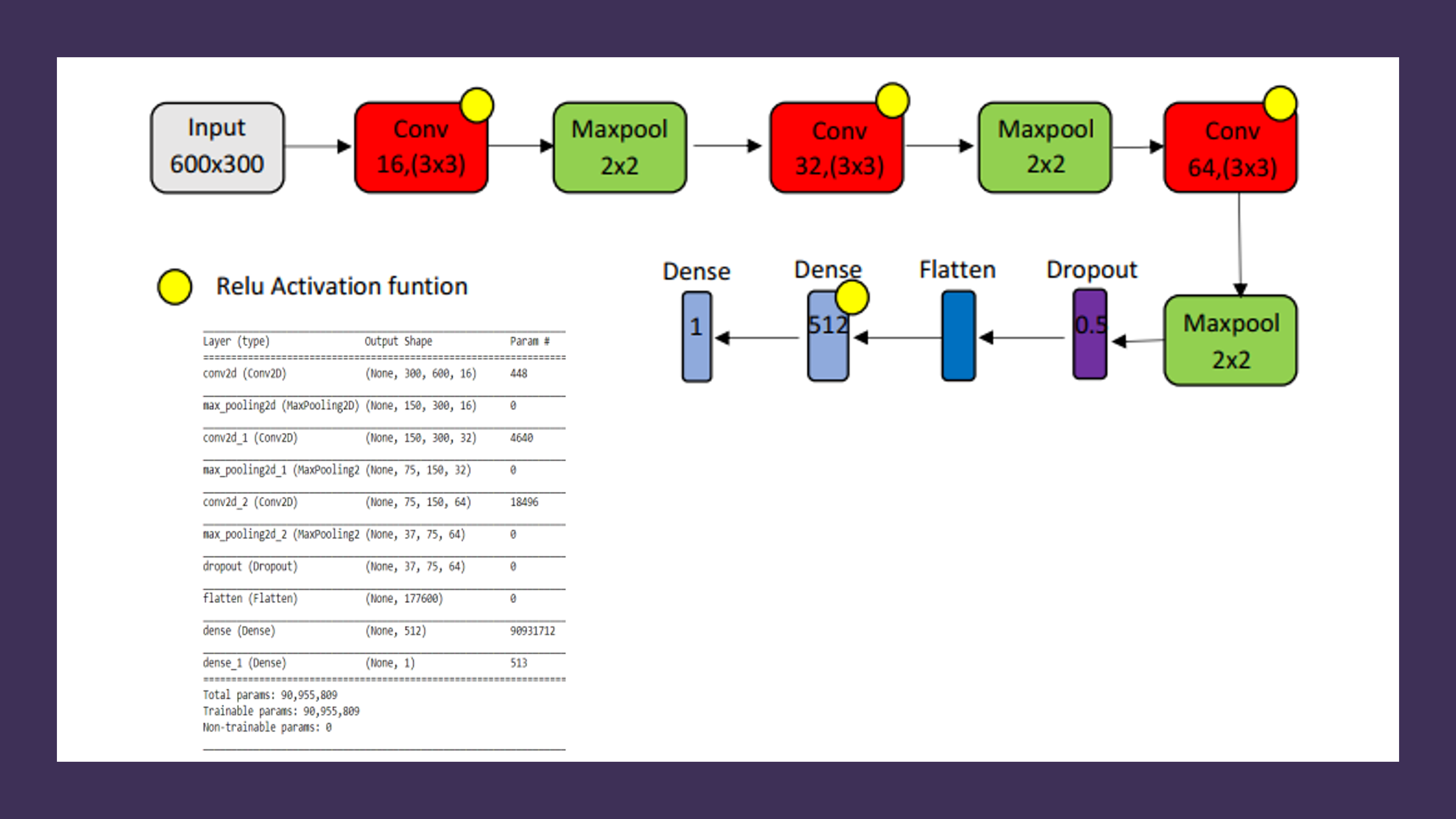

The CNN architecture was based on the AlexNet model and uses three convolutional layers, each followed by a max-pooling layer. A dropout layer follows the convolutional blocks, and two fully connected (dense) layers come after it.
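The layer ordering described here can be sketched in Keras roughly as follows. This is only an illustrative sketch: the input size, filter counts, kernel sizes, dense width, and dropout rate are assumptions chosen for the example and are not taken from the original model, and `build_cnn` is a hypothetical helper name. The activations follow the text: ReLU on the hidden layers and softmax on the final dense layer.

```python
from tensorflow.keras import layers, models


def build_cnn(input_shape=(128, 128, 3), num_classes=2, dropout_rate=0.5):
    """Three conv + max-pool blocks, then dropout and two dense layers.

    All sizes below are assumed values for illustration only.
    """
    return models.Sequential([
        layers.Input(shape=input_shape),
        # Block 1: convolution followed by max pooling
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        # Block 2
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        # Block 3
        layers.Conv2D(128, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        # Flatten the feature maps, then regularize with dropout
        layers.Flatten(),
        layers.Dropout(dropout_rate),
        # Two fully connected layers; only the last one uses softmax
        layers.Dense(64, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),
    ])


model = build_cnn()
```

Calling `model.summary()` prints the resulting layer shapes, which is a quick way to check that the assumed sizes fit the actual input images.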

Every convolutional and dense layer uses the ReLU (rectified linear unit) activation function, except the last dense layer, which uses softmax to output the class probabilities for the binary classification. ReLU is applied to the feature maps to increase the non-linearity of the network, which matters because images are highly non-linear. It also lets the network train faster without any significant penalty to generalization accuracy. Dropout improves generalization by randomly skipping some units or connections with a certain probability during training.
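To make the three operations mentioned above concrete, here is a minimal pure-Python sketch of ReLU, softmax, and dropout on plain lists. The function names and sample values are hypothetical, and the dropout shown is the common "inverted" variant (kept units are rescaled by the keep probability), which is an assumption rather than something the text specifies.

```python
import math
import random


def relu(x):
    """Rectified linear unit: pass positive values, clamp the rest to zero."""
    return max(0.0, x)


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dropout(activations, rate, rng):
    """Inverted dropout (assumed variant): zero each unit with probability
    `rate` and rescale the kept units so the expected value is unchanged."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


hidden = [relu(v) for v in [-1.5, 0.0, 2.0]]   # negative input is clamped to 0
probs = softmax([2.0, 0.0])                    # two-class probabilities
dropped = dropout([1.0] * 8, rate=0.5, rng=random.Random(0))
```

Dropout is only applied during training; at inference time all units are kept, which is why the inverted variant rescales during training instead.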